

Code [GitHub] | Paper [arXiv] | Cite [BibTeX]

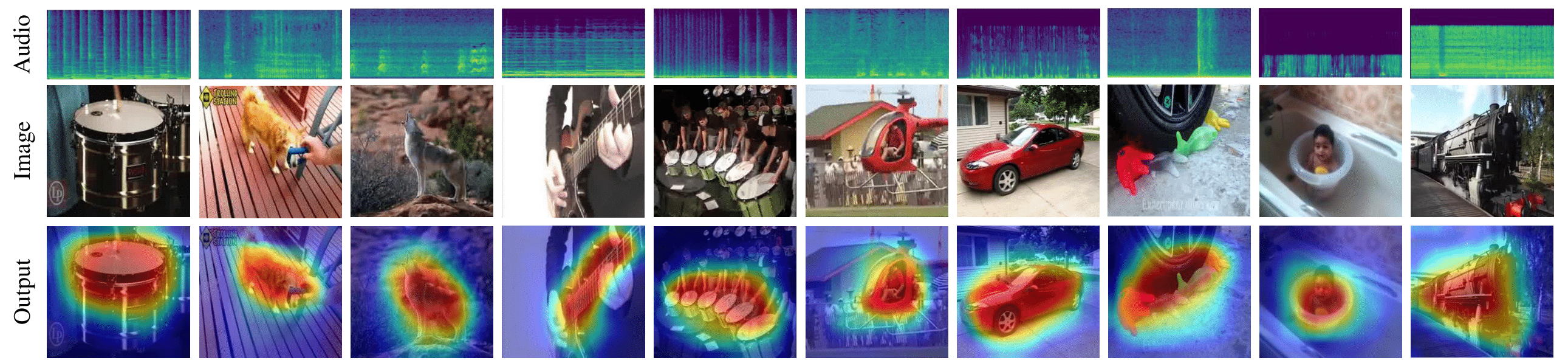

Image Visualization

Video demos

(i) Audio retrieval

1. Query audio

Retrieved audio clips

2. Query audio

Retrieved audio clips

(ii) Audio-image cross-modal retrieval

1. Query audio

Retrieved images

2. Query audio

Retrieved images

3. Query audio

Retrieved images

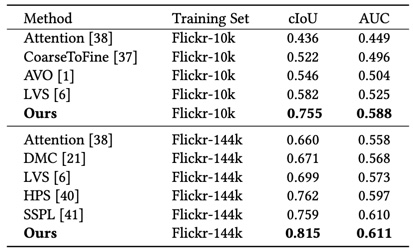

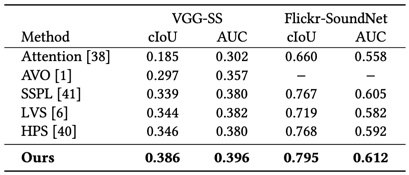

R1: Visual sound source localisation

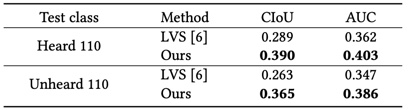

On both subsets of Flickr-SoundNet, our proposed method outperforms existing methods.

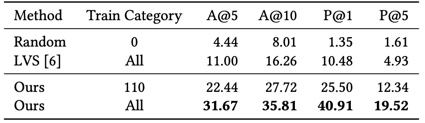

On the VGGSound dataset, our proposed method outperforms the current state of the art by a significant margin.

Both approaches suffer a performance drop on unheard categories. However, our proposed model still maintains high localisation accuracy in this open-set evaluation.

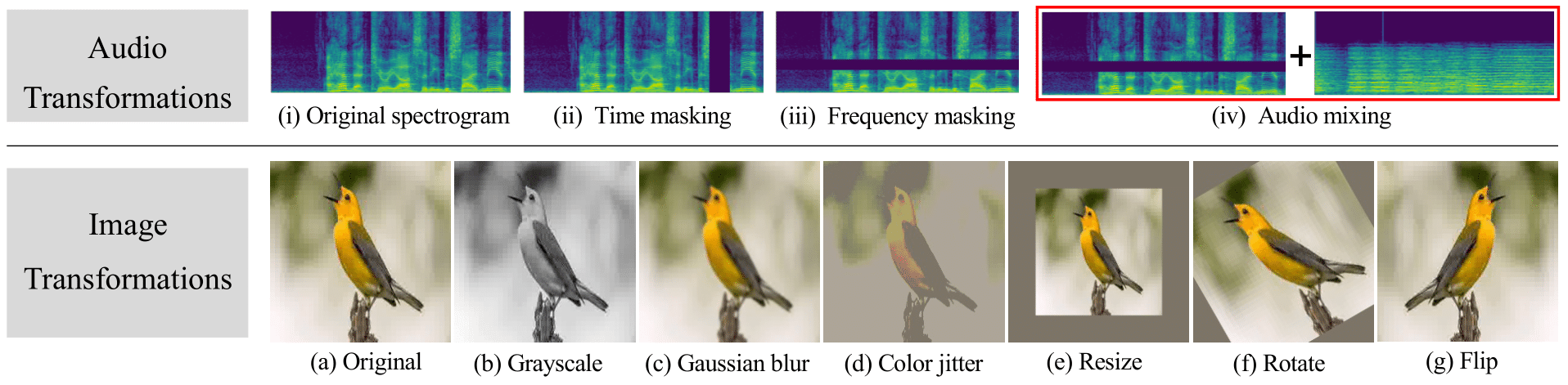

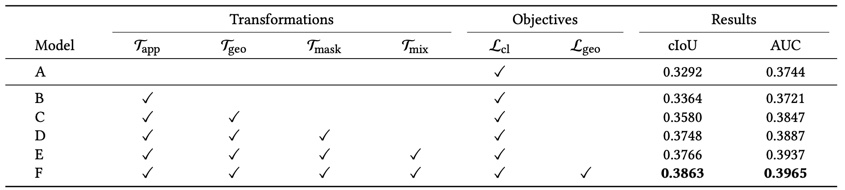

R2: Analysis of data transformations

We perform an ablation study to show the usefulness of the data transformations and the equivariance regularisation in the proposed framework.

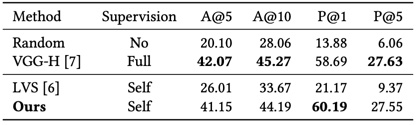
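To make the idea of equivariance regularisation concrete, here is a minimal NumPy sketch. It is not the loss used in the paper, whose exact form this page does not state. It assumes the regulariser penalises the gap between the localisation map of a transformed image and the transformed localisation map of the original image. The toy localiser, the horizontal flip, and all names (`localisation_map`, `equivariance_loss`, `position_bias`) are hypothetical and chosen only for illustration.

```python
import numpy as np

def localisation_map(image, audio_emb, weight):
    """Toy localiser (an assumption, not the paper's network): project each
    pixel to a feature vector and take its cosine similarity with the audio
    embedding."""
    feats = image @ weight  # (H, W, C) @ (C, D) -> (H, W, D)
    feats = feats / (np.linalg.norm(feats, axis=-1, keepdims=True) + 1e-8)
    audio = audio_emb / (np.linalg.norm(audio_emb) + 1e-8)
    return feats @ audio  # (H, W) similarity map

def hflip(x):
    """Horizontal flip along the width axis, for (H, W) or (H, W, C) arrays."""
    return x[:, ::-1]

def equivariance_loss(localiser, image, transform):
    """Mean squared gap between f(T(x)) and T(f(x)); zero when the localiser
    is exactly equivariant to the transform."""
    map_of_transformed = localiser(transform(image))
    transformed_map = transform(localiser(image))
    return float(np.mean((map_of_transformed - transformed_map) ** 2))

rng = np.random.default_rng(0)
image = rng.normal(size=(8, 8, 3))
audio_emb = rng.normal(size=16)
weight = rng.normal(size=(3, 16))

# A purely per-pixel localiser commutes with flipping, so its loss is ~0.
pixelwise = lambda img: localisation_map(img, audio_emb, weight)

# Adding a column-dependent bias breaks equivariance, so the loss is positive.
position_bias = np.linspace(0.0, 1.0, 8)[None, :]
biased = lambda img: pixelwise(img) + position_bias

loss_equivariant = equivariance_loss(pixelwise, image, hflip)
loss_biased = equivariance_loss(biased, image, hflip)
```

In this toy setting the per-pixel localiser incurs essentially no penalty, while the position-biased one does. A term of this kind would discourage predictions that depend on absolute image position rather than on content.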

R3: Audio retrieval and cross-modal retrieval

Our model, trained on the sound source localisation task, has learnt powerful representations that achieve strong audio retrieval performance, comparable to the supervised baseline (VGG-H).

Our model also shows strong cross-modal retrieval performance, surpassing the baseline models by a large margin.
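As a rough illustration of how embedding-based retrieval of this kind can work, the sketch below ranks a gallery by cosine similarity to a query embedding. It assumes that query and gallery embeddings live in a shared space (audio to audio, or audio to image). The page does not describe the actual retrieval protocol, so the function names and the toy vectors are hypothetical.

```python
import numpy as np

def l2_normalise(x, eps=1e-8):
    """Scale vectors along the last axis to unit length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def retrieve_top_k(query_emb, gallery_embs, k=3):
    """Return indices and cosine similarities of the k gallery items
    closest to the query, most similar first."""
    sims = l2_normalise(gallery_embs) @ l2_normalise(query_emb)
    order = np.argsort(-sims)[:k]
    return order, sims[order]

# Toy 2-D embeddings standing in for audio or image features (illustrative only).
gallery = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]])
query = np.array([0.9, 0.1])

top_idx, top_sims = retrieve_top_k(query, gallery, k=2)
```

For audio retrieval the gallery would hold audio embeddings. For audio-image retrieval it would hold image embeddings produced by the visual branch, under the same shared-space assumption.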

Based on a template by Phillip Isola and Richard Zhang.